Summary of Key Points

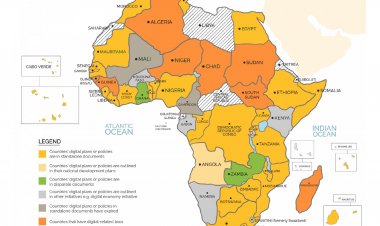

- Africa is playing a central role in the global AI supply chain, particularly in the early production phase. Countries, including Egypt, Rwanda and Mauritius, have published comprehensive AI strategies.

- Yet, foreign AI technologies dominate in the region, offering technological products and solutions that may not be compatible with local developmental priorities.

- To support local AI capacity favouring local economies and ecosystems, policy responses to AI should build on national digital agendas and prioritise inclusive digital, data and computing infrastructure and skills development.

- As can be seen in Egypt’s national AI strategy, unfavourable foreign agreements should not be taken up to support AI that is incompatible with realising inclusive developmental priorities.

- The implications of AI on gender equality must be carefully considered given the continent’s existing digital gender divide, the retrogression in gender equality and the advances in data-led economies that the pandemic has brought about.

- Lastly, the EU and the African region should explore a coordinated response to AI governance based on the shared commitment to protecting fundamental rights and freedoms.

Introduction

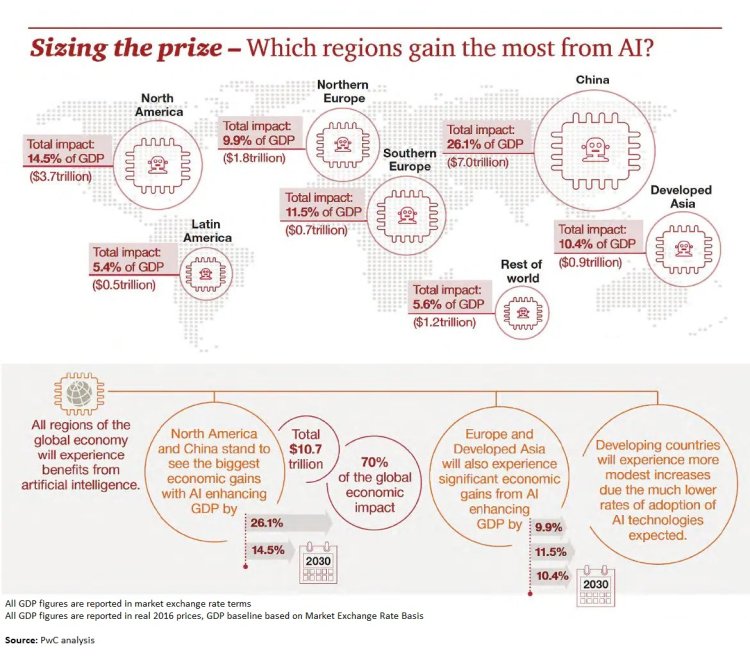

Artificial Intelligence (AI) is a principal policy concern globally. In 2017, PricewaterhouseCoopers (PwC) published its seminal report, “Sizing the Prize”, which projected that, by 2030, AI would contribute US$15.7 trillion to the global economy. However, the distribution of the wealth and power afforded by AI technologies has been, to date, highly unequal. In PwC’s rendition of the future economy of AI – depicted in the figure below – the growth for the African continent is so slight it does not feature. Yet, given that AI production takes place at a planetary level, Africa is already playing a central role in developing AI systems that require the use and availability of natural resources, labour and skills from across the region. Despite the global reach of the AI supply chain, the benefits of these technologies have not been realised in the African region and instead accrue largely to the Big Tech companies of the Global North and China and to those who can afford the daily efficiencies offered by AI through smart speakers, such as Amazon’s Alexa, and smart cars.

Figure 1: Which regions will gain the most from AI

On the other hand, the African continent boasts a unique context that is well-positioned to take advantage of AI technologies to advance local socio-economic growth, including through the potential ability to leapfrog technological infrastructure associated with the Third Industrial Revolution and a growing and dynamic youth ready to adapt to new forms of digital work and entrepreneurship. African governments should prioritise adopting AI solutions that support the realisation of national developmental priorities and contribute to prosperous and inclusive societies.

Policy responses to AI are emerging across the continent, with Egypt, Mauritius and Rwanda being the first African countries to publish national AI strategies. However, Africa remains dominated by foreign technology and AI firms that do not necessarily support the realisation of national developmental priorities (such as those outlined in the UN 2030 Sustainable Development Goals). Or worse, they exacerbate exclusion and oppression for certain groups, particularly women. African policymakers must prioritise the growth of local AI capabilities and capacities that can be drawn on to meaningfully advance inclusive economic growth and social transformation. This prioritisation requires AI policy responses to build on national digital agendas and to foreground equitable access to digital, data and computing infrastructure.

In this brief, AI is broadly defined in order to account for the full AI life cycle and the harms and benefits that arise accordingly. The AI life cycle includes the raw materials and labour required to build the infrastructure and data systems on which AI is produced, through to the design, development, roll out and review of AI technologies in societies. Such technologies include machine-learning-based systems as well as broader data-driven systems and technologies, such as those used in digital ID and biometric programs.

This brief is structured in three sections that unpack a series of questions.

Section 1 explores six key AI-related issues (digital IDs and biometrics, facial recognition systems, social media and content takedown, click work, gig economies and foreign influences) in addressing the following:

- What role is the African continent currently playing in AI life cycles and supply chains?

- What concerns are arising for inclusion and local development, in particular, that require addressing through national policies or similar?

Section 2 provides an overview of AI policy frameworks published in Africa and emerging policy debates as they pertain to the region, responding to the following questions:

- What priorities are addressed in emerging AI policy frameworks in Africa and related policy documents?

- What is the relevance of the EU’s regulatory approaches to AI?

Lastly, Section 3 sets out recommendations to African policymakers for advancing beneficial AI systems locally, addressing the following question:

- What key considerations must African policymakers take into account in developing local, national and regional policy responses to AI?

AI in Africa: Emerging Technologies and Concerns

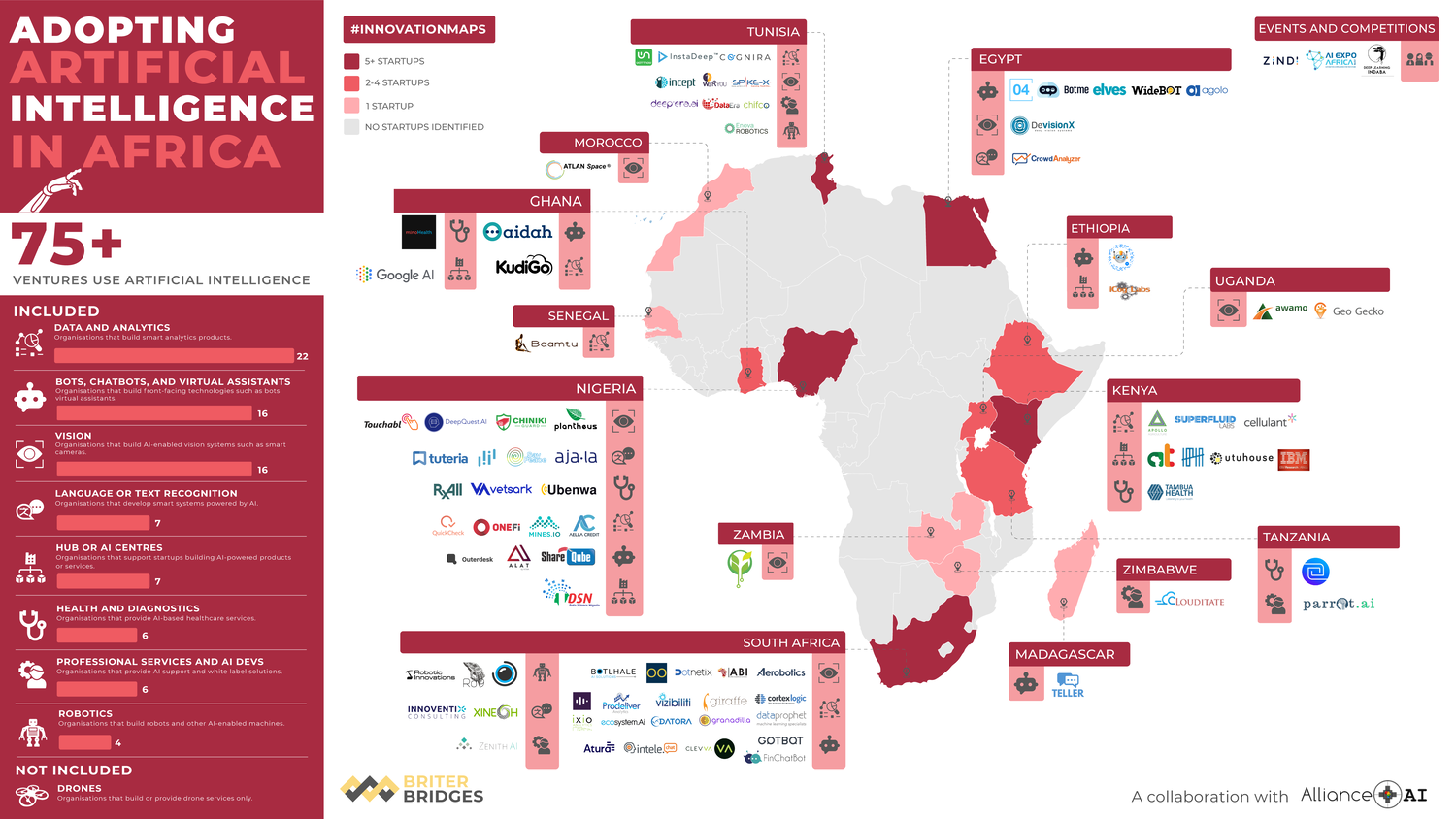

AI-driven systems and solutions are commonplace across the continent. In Kenya, locally designed AI solutions are being developed to assist farmers in procuring farming equipment or in deciding the optimal time to sow or harvest certain crops. In Lagos, Nigeria, as in many other African cities, growing communities of young data scientists are coming together to refine their skills in machine learning and advance new AI-driven technologies and industries. At the continental level, the Deep Learning Indaba is held each year to support talent and leadership in AI and machine learning in Africa. An overview of some AI adoption on the continent is depicted in the figure below:

Figure 2: AI Start-Up Scene in Africa.

Yet, AI technologies pose real and potential threats to the societies involved in their design and construction and to those where the technologies are tested and used. This section explores some of the challenges being experienced in Africa in relation to the following six areas:

- Digital IDs and Biometrics

- Facial recognition systems

- Social media and content takedown

- Click work

- Gig economies

- Foreign influences

After exploring these challenges, the section proceeds to discuss the role of foreign governments and companies in AI in Africa.

AI Challenges in Africa

Digital IDs and Biometrics

Increasingly, digital ID systems, often encompassing biometric technologies that read facial signatures, fingerprints or iris scans, are being adopted by both government bodies and private companies in Africa. There are two key rationales that support the adoption of these technologies. First, to centralise and promote efficiencies across government services and prevent fraudulent social services claims and, second, to protect consumers against identity theft.

In Namibia, the leading internet services and telecommunications company, MTC, is rolling out an AI-driven digital ID verification system to facilitate customer access to its services. Once rolled out, the system will generate and store massive amounts of sensitive personal data – whether facial image, fingerprint or otherwise – which, given the service is being offered by a telecommunications provider, could be linked to other sensitive data such as geolocation. At present, the system is voluntarily available to customers, and little information has been published about how the data collected will be protected or who might access it.

In South Africa, a rather more problematic example of an AI-driven digital ID system was deployed in providing access to social grants for grant recipients. An estimated 18 million South Africans receive social grants, providing sorely needed social security for the country’s most vulnerable. In 2012, the national South African Social Security Agency (SASSA) contracted the company Cash Paymaster Services (CPS) to distribute social grants to beneficiaries through a digital ID system, which was later to encompass more advanced AI analytics. Net1, CPS’s parent company, had access to the data of all 18 million or so beneficiaries and used this data to develop targeted and predatory financial offers to these people. Given the relationship between Net1 and CPS, Net1 was able to deduct the loan repayments directly from the beneficiaries’ grant payments, resulting in individuals receiving little to no grant payment each month. The issue, heard over a series of court cases, demonstrated the unethical sharing of personal data of the country’s most vulnerable and South African communities’ critical lack of awareness of digital and information rights.

Facial Recognition Systems

Facial recognition technologies, another form of biometric AI, are controversial tools used to verify individuals based on facial records and are increasingly being used within the digital ID and biometric systems described above. Most recently, Meta (the parent company of Facebook, Instagram and WhatsApp) prominently halted the use of facial recognition technologies on its platforms, owing to the technologies’ inaccuracies, which have been shown to be more widespread and problematic for women, gender minorities and non-white populations, in particular. The use of facial recognition technologies in African cities, from Kampala to Johannesburg, presents a series of issues where such technologies have been developed elsewhere and not trained on local facial data, in addition to the pressing privacy and human rights-related issues these technologies raise. In South Africa, Vumacam, a company that uses a Danish-built facial recognition system, has been establishing a comprehensive network of surveillance cameras designed to profile suspicious behaviour. These technologies have been proven to misread African faces and severely limit human rights – such as freedom of movement, association and the right to equality and fair treatment. Their use in a racially divided country such as South Africa is highly risky and at odds with the country’s democratic vision of a transformed and equal society. In Uganda too, AI-powered facial recognition systems developed by Huawei were used in the 2020 elections to identify, track down and arrest supporters of the opposition leader, Bobi Wine.

Social Media and Content Takedown

The Big Tech foreign monopolies, which currently dominate in the African region, do not just threaten new entries to the market, particularly thanks to any first-mover advantage. The economic might of such companies, the foreign investment they bring (as is evident in the case of the Amazon Web Services (AWS) facility in Cape Town, see below) and their evasion of domestic laws can also negatively impact a government’s capacity to protect the rights of their citizens and those living within their borders.

Social media companies, which use AI to filter user content and to detect and take down inflammatory posts, are a good example of where domestic regulation across the world has not always been successful in holding such companies to account for harms caused by their algorithmic decision making. Indeed, social media has a profound presence in many parts of Africa, where it is estimated that over 95 million people across the continent connect via Facebook each month. During the Ugandan elections of 2020, Twitter was the key platform through which Bobi Wine connected to his constituents and supporters. In the same year, the Nigerian government came under fire from the international community for banning Twitter following the social media company’s takedown of a post from the official presidential Twitter account of Nigerian President Muhammadu Buhari. The takedown practices of social media companies tend, however, to provide most coverage in Europe and North America, and the staff and protocols for African countries are thin on the ground, with limited local expertise and language skills. In writing about the episode, Nigerian journalist Adaobi Tricia Nwaubani notes that, despite any authoritarianism of the Nigerian government in banning Twitter, ‘the US-owned, private firm appeared to be interfering in the internal affairs of a sovereign African state without enough background knowledge to understand the consequences of its actions.’

Click Work

With the increasing digitalisation and datafication of global society that is at the heart of the worldwide adoption of AI, the labour market – including that on the African continent – is rapidly transforming. Similar to the historical exploitation of the Global South, where multinational companies would seek out cheaper labour in Africa, Asia or South America, the global AI economy now demands a new form of precarious and insecure labour, which again can be more readily extracted from impoverished populations and communities. Micro digital labour, or click work, which has been well documented in scholarship and commentary on Amazon Mechanical Turk (a platform to crowdsource online work), involves labelling vast amounts of data and requires workers to simply have access to a computer. Labourers must generally bid for such work, are paid a fractional amount per unit of work and are often exposed to emotionally distressing content, such as when labelling violent and disturbing images or videos. A recent investigation into digital labour in the Global South revealed how refugee camps have become a new point of exploitation for Big Tech and other platforms seeking out cheap digital labour. In Dadaab, one of the world’s largest refugee camps located near the eastern border of Kenya and sheltering a quarter of a million refugees from across the African continent, tents containing hundreds of computers and yards of wires have been erected to facilitate click work by camp residents. As Phil Jones writes, “a day’s work might include labelling videos, transcribing audio, or showing algorithms how to identify various photos of cats”, all of which enable AI technologies to function more efficiently but subject already vulnerable people to meaningless and distressing work without fair labour rights or protections.

Gig Economies

In addition to micro digital labour, the growing AI-driven gig economy platforms such as Uber and Bolt Food, operational across many African countries, pose risks to workers who are offered limited job security and benefits – such as insurance, healthcare and leave pay – and are routinely subject to intrusive surveillance methods and the nudging and control of behaviour through algorithmic systems monitoring their daily activities and performance. Kenya has a fast-growing gig economy that has expanded beyond the initial first-mover advantage of international platform companies, with 93,000 gig workers projected to be active in the country by 2023. A study conducted by Mercy Corps: Youth Impact Labs on the emerging gig economies of Kenya, Tanzania and Ethiopia emphasised the need to develop labour protection policies for gig workers, including establishing a sectoral fund as a safety net for circumstances such as Covid-19, which left many gig workers without work opportunities or labour protections to cover their losses.

The Role of Foreign Companies and Governments in AI in Africa

Alongside the bright spots of transformative AI entrepreneurism evident across the continent, AI technologies developed elsewhere with far less certain benefits for local communities are rapidly appearing. China is, by a long way, the leading exporter of AI-driven technologies to the African continent. The nation’s macro-economic foreign policy, the Belt and Road Initiative, and with it Beijing’s Digital Silk Road Initiative, have been at the forefront of China’s AI expansion into Africa. By May 2021, 40 out of 54 African countries had signed Belt and Road Initiative agreements that have brought smart city infrastructure, 5G networks, surveillance cameras, cloud computing and e-commerce to many African cities.

AI not only poses a threat to fundamental human rights and political stability, particularly when developed elsewhere without meaningful testing, impact assessment or local skills development; AI technologies also tend to be developed and offered by a small number of multinational monopolies, which undercut local businesses and domestic growth potential. Huawei is a clear example of a dominant market player. But others include those companies able to offer cloud services essential for AI systems and the storage of the huge datasets needed to develop them, such as AWS – the leading cloud services provider globally. The establishment of highly technical cloud services and the massive data centres required to house and power these data-driven services require substantial resources – land, electricity, water and technical skill – which tend to be accessible only to Big Tech.

In South Africa, AWS recently began to establish a new data storage centre in Cape Town, as part of the company’s strategy to locate its regional headquarters at the southern tip of the continent. The location of the 150,000 m² development is, however, on contested indigenous land of South Africa’s first peoples, the Khoi and the San, originally dispossessed from the area by Dutch colonisers in the 17th century. Despite campaigns to prevent the development, and a court case that is yet to be heard, the promise that the site will generate 8,000 direct and 13,000 indirect jobs is a compelling counter-argument in a country where, following COVID-19, the official unemployment rate is 46.6%, according to the expanded (and more inclusive) definition of unemployment.

Regulating AI in Africa - Emerging Trends

Countries from across different regions of Africa are increasingly developing or looking to develop national AI strategies to guide AI adoption. Many of these documents follow existing global trends around AI policies, which Tim Dutton has listed as follows:

- Basic and applied research in AI

- AI talent attraction, development and retainment

- Future of work and skills

- Industrialisation of AI technologies

- Public sector use of AI

- Data and digital infrastructure

- Ethics

- Regulation

- Inclusion

- Foreign policy.

Other African countries have AI-relevant policy mechanisms within digital or data policy frameworks. This section provides an overview of these emerging trends, beginning with the national AI strategies of Mauritius, Egypt and Rwanda and the Blueprint for AI in Africa developed by the pan-African government-endorsed group SMARTAfrica. The section then proceeds to discuss some of the developing AI-related policy areas in Africa, before concluding with a sub-section on the opportunities and limitations of policy transfer from other regulatory contexts.

Reviewing Africa’s Existing AI Policy Landscape

Mauritius

Mauritius was the first African country to publish an AI strategy document, releasing its Mauritius Artificial Intelligence Strategy in 2018 as a report of the national Working Group on AI. The document sets out a vision for AI as ‘creating a new pillar for the development of our nation’, advancing socio-economic growth across key sectors. A series of priority AI areas were identified, with extensive suggestions for potential applications and scoping of existing projects. These priority areas are manufacturing, healthcare, fintech and agriculture. Two further areas are AI in the Ocean Economy, with smart ports and traffic management, and AI in energy management to contribute to reducing CO2 emissions. The report closes with a series of recommendations, including provisions for skills development, research and development funding and governance mechanisms such as data protection, open data platforms and the establishment of an AI ethics committee.

Egypt

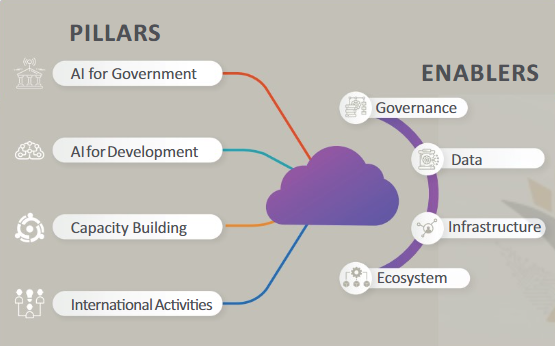

Egypt published a comprehensive National Artificial Intelligence Strategy in July 2021, which is to be implemented over the next three to five years. Commendably, the central concern of the Strategy is to ensure that AI adoption and solutions contribute to realising Egypt’s national developmental priorities; however, it remains to be seen whether the Strategy will address some of the human rights concerns in relation to mass government surveillance and censorship. The Strategy rests on four pillars: to advance the use of AI in government to improve efficiencies, transparency and decision making; to advance the use of AI by the public and private sector in the service of development goals; to build capacities around AI – education, skills and research – across all generations and, finally, to play a lead role in international and regional cooperation around AI through bilaterals, shared commitments and partnerships. These four pillars are to be supported by four enablers: governance, including laws, policies and ethical and technical guidance; data, through both the Personal Data Protection Law, passed in 2020, and a new, yet to be developed data strategy; infrastructure, including cloud computing and data storage; and ecosystems, which includes the institutions, start-ups and talent needed to ensure a responsible and innovative national AI ecosystem.

Figure 3: Pillars and enablers of Egypt's AI Strategy

Rwanda

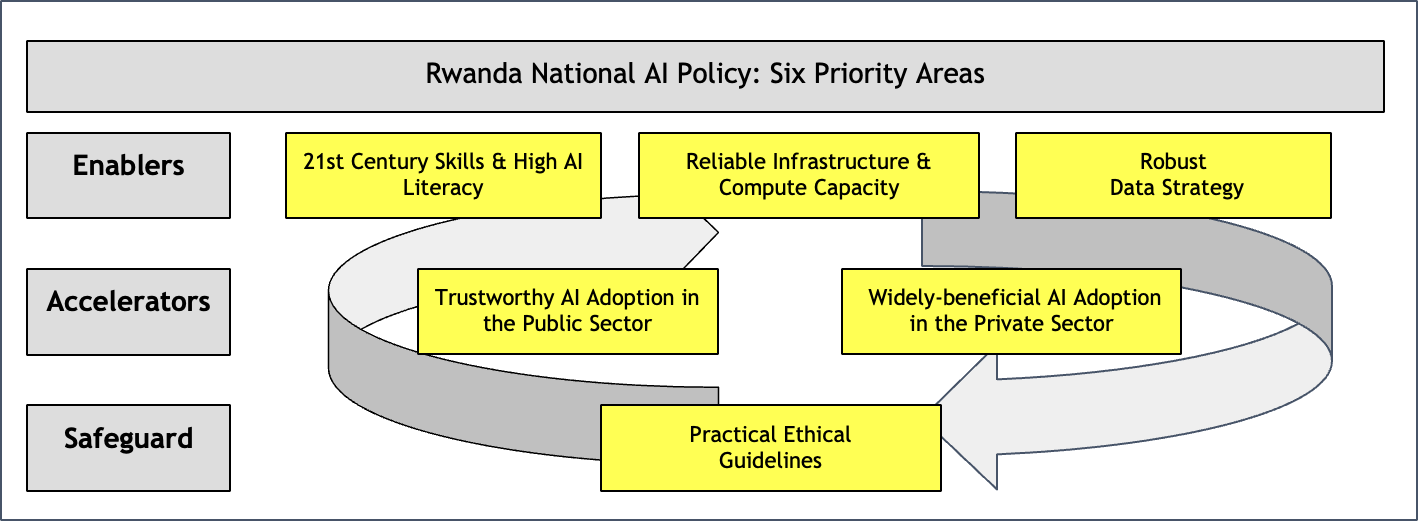

Rwanda is in the process of finalising a National AI Policy, expected to be published in 2022. The Policy has six priority areas, sub-divided into enablers, accelerators and safeguards. Similar to Egypt, Rwanda affords priority to “enablers” such as skills capacity and data availability and will be looking to advance the adoption of AI in both public and private sectors, for the benefit of a wider society.

Figure 4: Rwanda National AI Policy

SMARTAfrica Blueprint

SMARTAfrica was established in 2013 by various African heads of state to advance information and communication technologies and digital development on the continent. The organisation has published a series of policy blueprints for African nations to adopt in areas of digital governance, including smart cities and AgriTech. In 2021, SMARTAfrica and the South African government published the Blueprint on Artificial Intelligence for Africa. Expressing AI as a general-purpose technology that affects all levels of society and the economy, the Blueprint sets out five pillars of a successful AI strategy for African countries:

- Human capital, including a technologically skilled workforce and AI talent;

- From lab to market and the design of AI solutions that can be scaled up and attract venture capital investment;

- Infrastructure necessary for developing AI locally, such as access to data and high-powered computing;

- Networking through an expanded ecosystem of public and private bodies and partnerships, including international organisations and industry bodies; and

- Regulations to address emerging challenges and opportunities of AI at national and sectoral levels.

Similar to Mauritius’s AI Strategy, the Blueprint includes key use cases for AI across agriculture, healthcare, education, financial services, energy and transportation and climate change.

It is evident from the above that the emerging AI policy frameworks being adopted in Africa can go a long way to addressing some of the issues outlined in Section 1. They include concerns around data protection raised by digital ID systems, for example, where strong personal data protection laws and effective oversight bodies can play a key role in countering potential harms. Also, the focus on building national AI ecosystems supported by the necessary data and computing infrastructure is key to countering foreign tech influences on the continent and promoting AI solutions that serve local needs.

For labour markets, there is a clear emphasis in the emerging frameworks, and particularly Egypt’s National AI Strategy, on ensuring that human labour is not replaced by AI-driven automation wholesale. However, not enough attention is being paid to the labour rights of digital workers, such as click-labourers or those that work in gig economies. In other areas, the emerging frameworks do not go far enough in addressing the potential that AI holds for undermining democracy, such as through the spread of fake news or inappropriate takedown rules, or violating human rights with, for example, facial recognition technologies or AI activities that negatively impact environmental or cultural rights.

The remainder of this section offers a discussion on some of the developing AI-related policy areas in Africa and the extent to which they can address some of the emergent issues associated with AI outlined above, before moving on to consider the opportunities and limitations of looking to the EU for guidance on AI governance frameworks.

Developing AI-related Policy Areas in Africa

Data Governance

Data are a key building block in AI development. To support the local development of AI solutions that address local developmental problems and priorities, AI developers require access to vast amounts of relevant and quality data. Sub-Saharan African results of the Open Data Barometer showed the region to be far behind other regions in providing meaningful access to public sector data. The focus of the Egyptian and Rwandan AI strategies on data policies and ensuring access to public data is, therefore, to be welcomed. Particularly encouraging is the adoption by the African Union Commission of the Continental Data Policy Framework in February 2022, which underscores equitable access to digital and data-driven technologies for all Africans.

Data protection laws are impacting attitudes toward sharing data that may contain personal information. The European General Data Protection Regulation (GDPR) places strict adequacy requirements on the sharing of personal data outside the European Union (meaning that data cannot be shared with a third party in a country that does not have a level of data protection regulation comparable to the GDPR or without a written agreement to ensure that any third party processes the personal data in accordance with GDPR provisions). Simultaneously, on the African continent, an emerging policy trend sees African countries establishing data protection laws or data policies that seek to keep data within their home territory. The rationale behind this trend is largely economic, insofar as data are recognised as a key innovation resource that drives market efficiencies and economic growth, and data kept in the country can be more readily accessed by local groups. This trend is also a response to many digital companies in the African region bringing limited local economic benefit if they are operating on a digital model without renting or purchasing offices locally or employing local staff.

The Kenyan Data Protection Act, 2019, for example, requires that a copy of all personal data transferred outside the country must also be kept and stored within the country. While the Kenya Data Protection Act pertains only to personal data, a new Draft Data and Cloud Policy from South Africa sets out requirements for a copy of all data generated in South Africa to be stored in the country. This provision of the Draft Policy has, unsurprisingly, been criticised widely, along with other emerging policy choices in the region, including in Nigeria, that appear to be promoting data localisation, creating the conditions to legalise mass government surveillance and demonstrating a data governance regime at odds with best practices related to the stewardship – rather than ownership – of data.

Re-skilling

Typical policy responses to the threat of job loss from automation are also ill-fitting for many African contexts. The major policy response to the threat of job loss is to provide programs to re-skill or up-skill existing workforces. However, a particular issue that has not received adequate attention to date is the impact of automation on job loss for African women, who occupy the majority of positions of low-skilled labour and repetitive tasks and can thus be more readily replaced by automation. The experiences of women tend to be ignored in policy measures developed to address job loss, particularly reskilling programs, which fail to take into account the daily realities of women who bear the burden of domestic responsibilities and have no or limited time to reskill for the digital world. An example here is South Africa’s 2019 White Paper on Science, Technology and Innovation. Equally, it is not clear how far Rwanda’s new AI policy will address the structural challenges faced by women in the digital age. These issues are further exacerbated by what has been found to be a growing digital gender divide within the continent, where, on account of a variety of social factors including deep-set patriarchy, women have poorer access to digital technologies than men.

Social Media

Given the reach of platforms such as Facebook and Twitter, it is essential that African independent human rights and data protection oversight bodies can exercise their mandates to hold such actors to account, where platform-based activities may infringe on human rights and impact political stability. The ability to exercise this mandate is also essential where, conversely, government interference in blocking access to platforms may have a negative effect on political action and democracy. Other countries, including those in the EU, are facing similar challenges in regulating social media and its social impacts. One example of commendable efforts made in this regard is the recent case that the South African Human Rights Commission brought to the South African Equality Court in relation to a fake Twitter account that was promoting racially motivated hate speech on the platform. After extensive engagement with Twitter, the South African Human Rights Commission was provided with the information needed to trace the account’s owner and bring the matter to the Equality Court. In addition, to ensure fair and consistent protocols and practices, social media companies operating in Africa need to take responsibility for ensuring adequate local expertise and language skills in their content-moderation teams.

Policy Transfer: Opportunities and Limitations

In developing policy responses that address the full range of challenges and opportunities associated with AI, African policy-makers can draw some lessons from the EU experiences in foregrounding the protection of fundamental human rights and freedoms and the upholding of democracy. The announcement of the Africa-EU Global Gateway to provide €150 billion of investments in Africa, including in relation to both the green and digital transitions, is a critical step in supporting cooperation between the two regions. Investments in the digital transition are positioned as a top priority and include submarine and terrestrial fibre-optic cables, in addition to cloud and data infrastructures.

EU AI Act

The recently developed EU AI Act, for example, categorises AI applications according to risk levels, where risk corresponds to the scale of the threat to democracy and fundamental rights and freedoms. AI technologies posing a higher risk require more extensive regulatory, ethical and security safeguards. Safeguarding measures include social impact assessments for less risky technologies and independent audits for those that are higher risk. High-risk AI technologies – such as live biometrics – are banned under many circumstances.

Addressing the structural and infrastructural preconditions of AI is essential to building local AI ecosystems that embed local value systems and respond to local problems. In the African region, access to the vast amounts of data needed to adequately develop and train AI systems is more limited. This lack of access is evidenced in the Open Data Barometer, where internet penetration is described as highly unevenly distributed and often accompanied neither by reliable energy systems to power the high-performance computers required for AI nor by computing systems that are widely available to local innovators and entrepreneurs. The AI policy strategies and regulatory responses needed in the region are, therefore, necessarily quite different to those being advanced in the Global North or other parts of the world. Thus, the promotion of policy transfers or the setting of standards developed elsewhere does not always fit and can fail to address the structural and infrastructural contexts of African countries.

While the EU AI Act offers a starting point for considering the protection of human rights and democracy, it is insufficient in addressing some of the broader structural concerns with which African AI policy-makers need to contend. The rights-based approach can often focus on individual, so-called first-generation rights, such as privacy and freedom of expression, and fail to adequately address socio-economic rights, the realisation of which is critical for addressing the threat AI poses to equality. In addition, the risk model may not capture longer-term, societal harms, such as the denigration of indigenous and local knowledge systems, labour harms associated with click work and the gig economy, or the various environmental impacts of AI.

EU GDPR

In addition, the emergence of regulatory standards with extra-territorial application forces African jurisdictions to comply with provisions that may not be technically or economically feasible, or contextually appropriate. The EU GDPR is perhaps the most powerful legal instrument outside of European territories regulating the processing of personal data, including data that might ultimately be used when developing an AI system. The GDPR regulates the processing of European personal data, no matter where that processing takes place. In contrast, the South African and Kenyan data protection laws regulate the processing of personal data that takes place only within their respective jurisdictions. Thus, any African entity processing the personal data of Europeans must comply with the provisions set out in the GDPR.

While this compliance may not, on the face of it, be problematic, the GDPR establishes a more rigorous data protection regime than most regulatory regimes of this kind on the African continent, which has two potential implications. First, the level of technical data security protections required by the GDPR may not be available or affordable, particularly for smaller data processors, such as local data start-ups, and so the GDPR may – in effect – deny the entry of these groups into international markets. The GDPR is already having a significant impact on the sharing of data for research purposes between EU countries and countries in Africa. Second, since the GDPR sets a higher level of data protection than the current data protection regimes within the African continent, two levels of data protection are being created, where the personal data of Europeans being processed in Africa is better protected against interference and misuse than the personal data of Africans.

Key Policy Considerations for AI in Africa

As African policy-makers consider the best approach to AI strategies and adoptions in their countries, emphasis should lie on building sustainable local AI ecosystems that contribute AI solutions to advancing national developmental priorities and supporting inclusive and prosperous African societies. This approach requires considering the implications of AI beyond the mere economic dimensions. It necessitates critically assessing the extent to which global AI frameworks address the particular challenges AI poses in the region and building policy responses with the involvement of a broad set of stakeholders, including local tech and data entrepreneurs and social justice groups and communities that may be directly affected by AI policies.

Below are a number of key policy areas, developed out of the above analysis, for African policy-makers to consider. Attention must be paid at all times to the differential impact of AI on women and groups rendered vulnerable in society, such as refugees, children and persons with disabilities. Policy provisions must be tailored accordingly to ensure that AI adoption is inclusive and does not perpetuate social inequality.

Infrastructure Development

Every effort should be made by African governments to focus policy efforts on building and maintaining safe, secure and inclusive infrastructure to support the local development of AI. This focus includes policies to advance internet access and to prevent local actors from enacting internet shutdowns, as well as policies to support the good governance and availability of data for development. The African Union Data Policy Framework being considered by the African Union will be an important instrument for promoting uniformity in data governance standards across the continent. The Africa-EU Global Gateway should be utilised as a crucial mechanism for establishing equal access to basic – and advanced – digital infrastructure.

Regional Cooperation

Regional cooperation remains an important policy option for developing common regulatory responses for multinational and foreign tech companies operating in the region. This cooperation could also extend to developing taxation provisions for multinational social media platforms, for example. In addition, regional cooperation is needed to develop data-sharing agreements between countries that give local AI developers access to a broader range of public sector data useful in advancing developmental priorities. The African Continental Free Trade Area Protocol on E-Commerce may present an important opportunity here for integrating a provision to support inter-regional data sharing and thus support regional development goals and economic growth.

Building Local Capacity and Skills

The development of AI and related data and technology skills amongst policy-makers and workforces on the continent is a key pre-condition for developing and supporting the responsible use and development of AI. Holistic capacity development policies are needed to promote understanding around AI at all levels, with specific policy measures to advance women in STEM and AI-related decision-making positions. Policy provisions in this area may also include measures to attract diverse AI talent by lifting entry barriers into countries for Africans with data science and computing skills.

Community Participation and Beneficiation

With both the enormous economic promise of AI and the society-wide implications of some AI applications (such as biometric ID systems), every effort should be made to involve local communities in decisions around the design and deployment of AI systems that may affect them. In addition, beneficiation frameworks should be developed to support dividends for communities whose data are used in developing an AI system. In this regard, beneficiation should be a key principle of national AI ethical guidelines.

Advancing African Value Systems and Principles in AI Ethics

Within the diverse social and cultural contexts found within the African region, ethical standards for AI should emphasise digital literacy and education, community beneficiation, holistic reskilling programmes, access to basic digital infrastructure, protection of minority ethnic communities and promotion of diverse forms of knowledge in developing AI solutions.

International Development Assistance

International development assistance remains an important opportunity for African development. In supporting the adoption of responsible AI solutions on the continent, donors, intergovernmental organisations and other funders should concentrate on supporting efforts to build inclusive digital infrastructure and develop long-term local capacity in AI governance, as is being seen with the Africa-EU Global Gateway investment scheme. A final emphasis must be placed on ensuring African states retain their sovereignty in developing AI governance solutions that are built on national constitutional and related founding principles and advance the realisation of local development priorities.

Suggested Citation: Adams, R. 2022. ‘AI in Africa: Key Concerns and Policy Considerations for the Future of the Continent,’ Policy Brief No. 8. Berlin: APRI

About the Author

Dr Rachel Adams is the Principal Researcher at Research ICT Africa, where she directs the AI4D Africa Just AI Centre, is the Project Lead of the African Observatory on Responsible AI (AO4D) and is the Principal Investigator of the Global Index on Responsible AI. Rachel has published widely in areas such as AI and society, gender and AI, transparency, open data, and data protection. Rachel is the author of Transparency: New Trajectories in Law (Routledge, 2020), and the lead author of Human Rights and the Fourth Industrial Revolution in South Africa (HSRC Press, 2021).